Meritocracy vs Topocracy

The world isn’t always fair. Perhaps you know the frustration of pouring your heart into making something extraordinary, only to see it almost completely ignored by the crowd. On the other hand, celebrities are constantly talked about, even when they are ostensibly doing very little — if anything at all. Your clever lyrics and innovative musical composition lie in the obscure shadow of a pop idol singing “let’s go party” over the same riff used by dozens of clones. Is it just you, or is there an actual force causing this to happen? This is an interesting question I decided to study together with Clara Vandeweerdt.

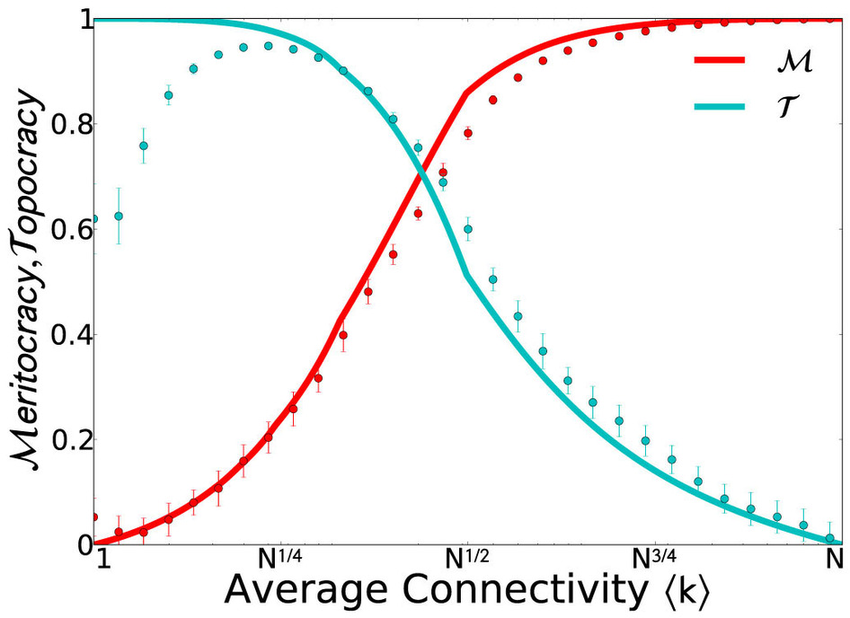

The result was a paper titled “Posts on central websites need less originality to be noticed,” recently published in Scientific Reports. In it, we try to disentangle the roles of meritocracy and topocracy. Meritocracy is a regime in which success is determined by merit: the best products win on the market. Topocracy is a term coined by Borondo et al. for the situation in which your position in the market determines your success. If you are a central hub (a celebrity), what you do is already watched by a lot of people. Getting those eyeballs is arguably the hardest part of succeeding, and if you’re famous you have inherited them from the past. Topocracy explains why, for instance, many fields are crowded with the offspring of a past celebrity: e.g. 8 out of 20 current Formula 1 drivers are sons of professional or amateur drivers (the rest are mostly sons of generic rich people, another form of topocracy).

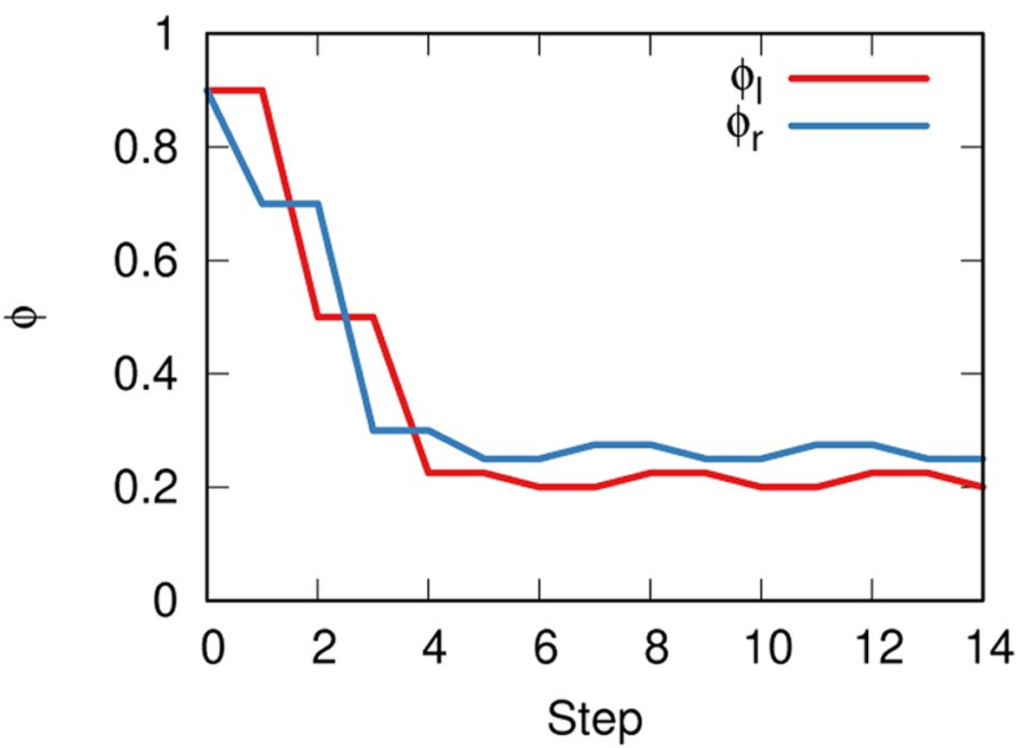

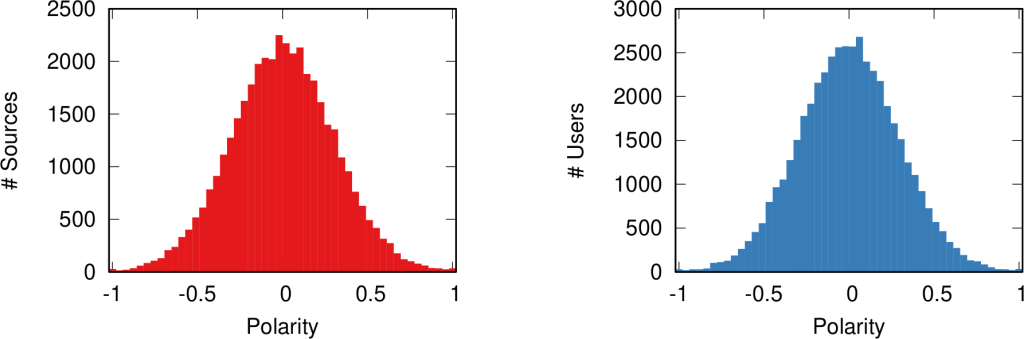

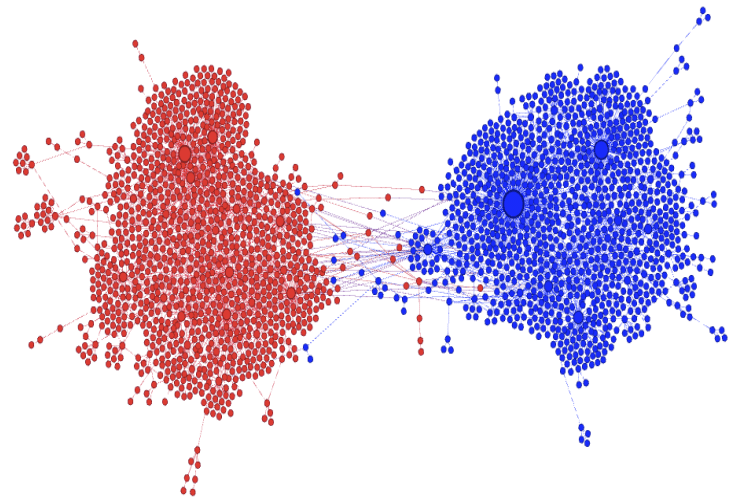

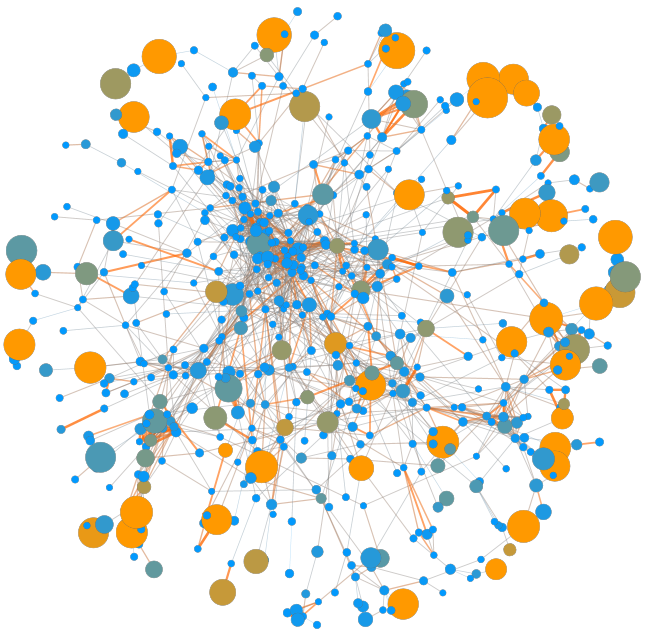

To study the tension between meritocracy and topocracy, we needed to narrow down the scope to make a scientific experiment possible. We decided to focus on tens of millions of Reddit posts. The objective was two-fold. First, we asked what role meritocracy and topocracy play in the probability of being noticed by somebody, i.e. attracting at least one upvote on Reddit. Second, we asked the same question about succeeding, i.e. ending up in the top 10% most upvoted posts on Reddit. To do this, we needed to define what “meritocracy” and “topocracy” meant on the platform.
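The two outcomes described above can be sketched in a few lines of Python. This is only an illustration, not the code used in the paper: the function name `label_posts` and the input format (a plain list of upvote counts, one per post) are assumptions made for this example.

```python
def label_posts(upvotes):
    """Flag each post as 'noticed' and/or 'successful'.

    upvotes: list of upvote counts, one per post (hypothetical input format).
    noticed    = the post attracted at least one upvote
    successful = the post is among the top 10% most upvoted posts
    """
    if not upvotes:
        return [], []

    noticed = [u >= 1 for u in upvotes]

    # Find the score of the post sitting at the edge of the top 10%.
    ranked = sorted(upvotes, reverse=True)
    k = max(1, len(upvotes) // 10)
    cutoff = ranked[k - 1]

    # Posts tied with the cutoff are all counted, so slightly more
    # than 10% of the posts can be flagged when there are ties.
    successful = [u >= cutoff for u in upvotes]
    return noticed, successful


upvotes = [0, 0, 0, 0, 0, 1, 2, 3, 5, 100]
noticed, successful = label_posts(upvotes)
print(sum(noticed), "noticed,", sum(successful), "successful")
```

Note how different the two bars are: half of the toy posts above clear the first one, while only a single post clears the second.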

To us “meritocracy” on social media means producing quality content. Estimating the quality of a Reddit post independently of its upvotes is hard. We decided to focus on originality, under the assumption that original content should catch the audience’s attention. In practice, we measured how surprising the words in the post’s title are. More surprising = more original.
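To give a feeling for what “surprising words” can mean, here is a minimal sketch. It assumes a simple unigram model: a word is surprising if it is rare in a reference set of titles, and a title's score is the average surprisal of its words in bits. The exact measure in the paper may well differ; the function names and the add-one smoothing are choices made for this example only.

```python
import math
from collections import Counter


def train_unigram(titles):
    """Count how often each word appears in a reference set of titles."""
    counts = Counter(w for t in titles for w in t.lower().split())
    return counts, sum(counts.values())


def title_surprisal(title, counts, total):
    """Average surprisal (in bits) of the words in a title.

    Uses add-one smoothing so that words never seen before get a small,
    non-zero probability instead of breaking the logarithm.
    """
    words = title.lower().split()
    if not words:
        return 0.0
    vocab = len(counts) + 1  # one extra slot for unseen words
    bits = [-math.log2((counts[w] + 1) / (total + vocab)) for w in words]
    return sum(bits) / len(bits)


corpus = ["funny cat gif", "funny dog gif", "funny cat video"]
counts, total = train_unigram(corpus)
print(title_surprisal("funny cat gif", counts, total))
print(title_surprisal("quantum cat sonata", counts, total))
```

The second title scores higher than the first, because “quantum” and “sonata” never appear in the toy corpus. A title made of random gibberish would score even higher, which is one of the limitations of equating surprise with quality.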

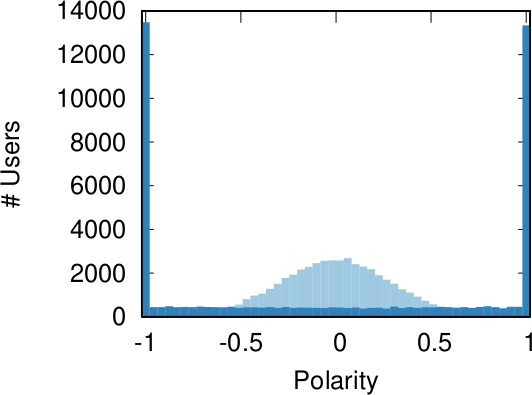

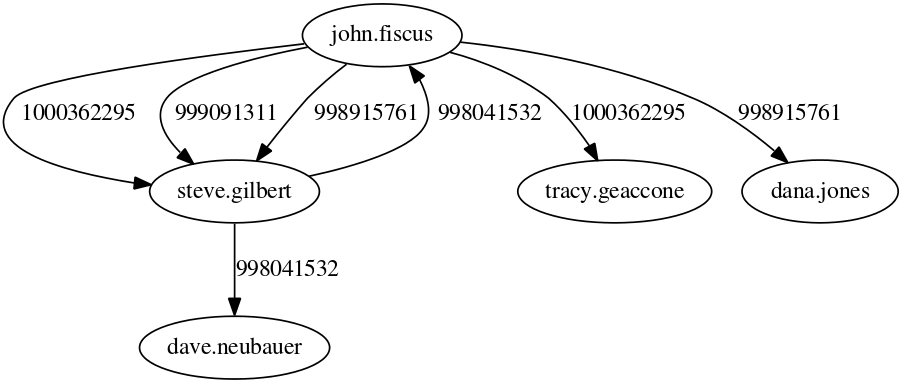

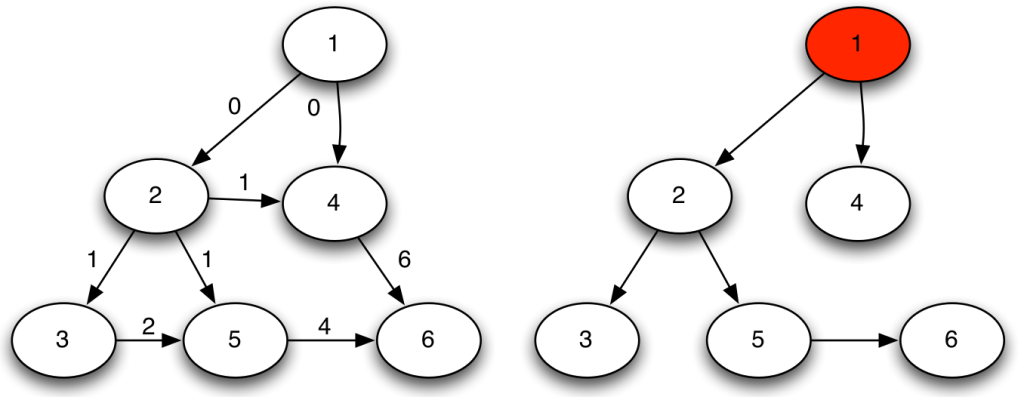

“Topocracy” on Reddit would involve how central a post is in the network of content creation. Reddit (fortunately?) does not have an underlying social network, so we had to look at the website used to make the post: is this funny GIF coming from imgur.com or gfycat.com? This is convenient, because websites live on a network of hyperlinks, and this lets us estimate their centrality.
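As a rough illustration of the idea, the sketch below extracts the website from a post's URL and scores websites by how many other sites link to them. In-degree is used here only because it is the simplest centrality measure to show; it is an assumption of this example, not necessarily the measure used in the paper. The edge list and the helper names are made up.

```python
from collections import defaultdict
from urllib.parse import urlparse


def domain_of(url):
    """Return the host of a URL, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def in_degree_centrality(links):
    """Share of the other websites that link to each website.

    links: iterable of (source_domain, target_domain) hyperlink pairs.
    Self-links and repeated links from the same source are ignored.
    """
    sources = defaultdict(set)
    nodes = set()
    for src, dst in links:
        nodes.update((src, dst))
        if src != dst:
            sources[dst].add(src)
    denom = max(1, len(nodes) - 1)
    return {n: len(sources[n]) / denom for n in nodes}


# Toy hyperlink network (invented for this example).
links = [
    ("a.com", "imgur.com"),
    ("b.com", "imgur.com"),
    ("c.com", "imgur.com"),
    ("a.com", "gfycat.com"),
]
centrality = in_degree_centrality(links)
print(centrality[domain_of("https://www.imgur.com/abc")])
print(centrality[domain_of("https://gfycat.com/xyz")])
```

In this toy network imgur.com is linked by three of the four other sites and gfycat.com by one, so a post hosted on the former would count as coming from a more central website. Subdomains such as i.imgur.com are treated as separate sites by this simple helper.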

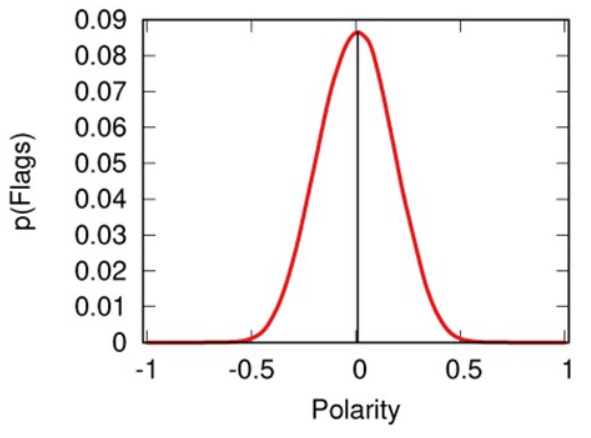

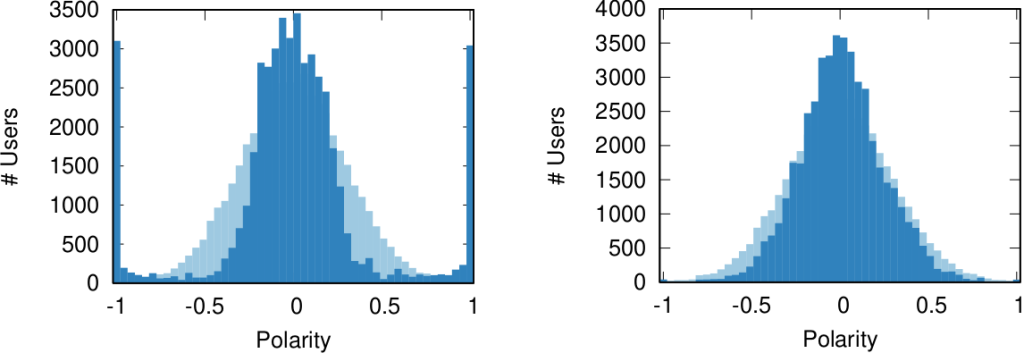

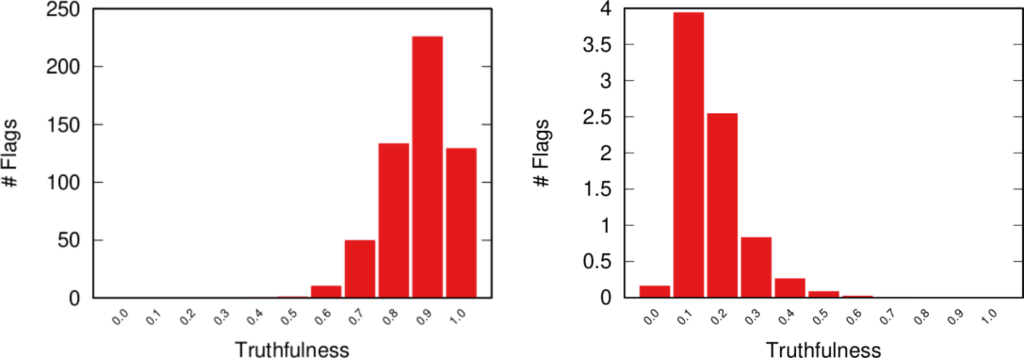

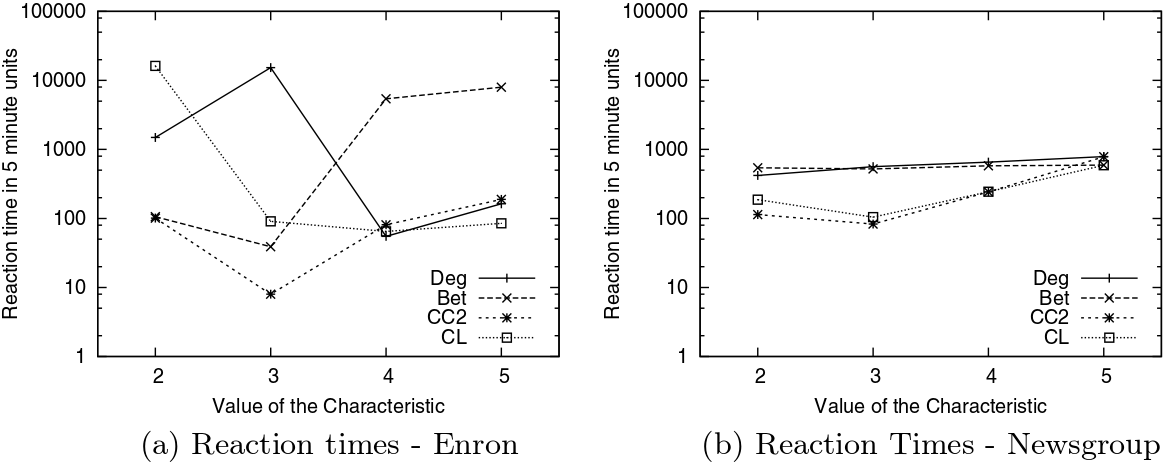

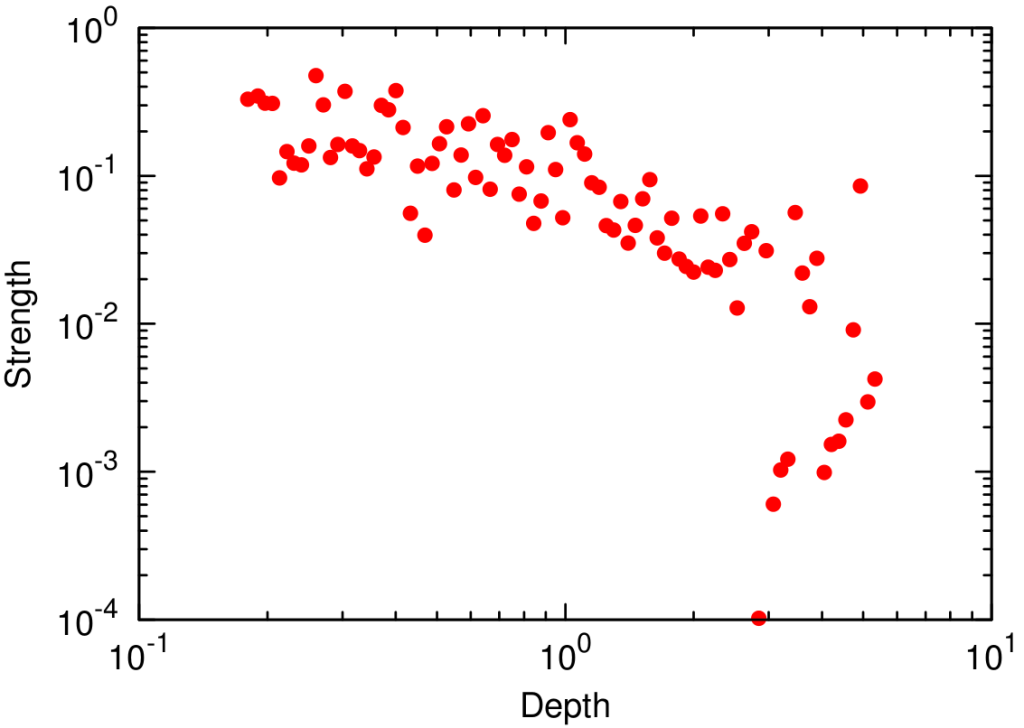

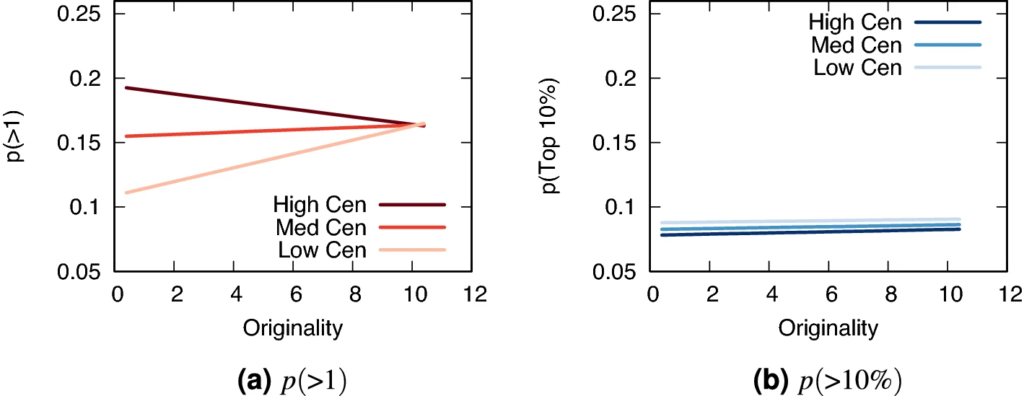

The results were interesting. Our first question is about getting noticed. Here we see that, if you are not using a central website to make your content, you need to be original: outsiders need to put in that extra effort to see their merits rewarded (faint red line in the image below, left panel). The opposite is true for central players: here originality is actually harmful (dark red line). If you’re central, you need to play it safe.

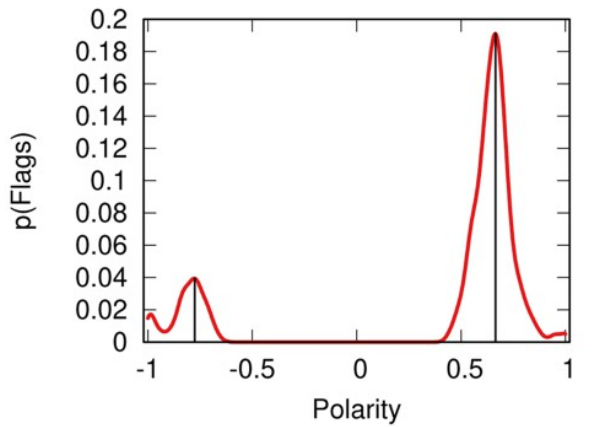

These results do not hold when it comes to the quest of becoming one of the top-scoring posts on Reddit. In this case, originality doesn’t play a role no matter the centrality of your platform (right panel in the image above: all lines are equal and flat, showing no effect).

There are tons of caveats in our research. It is not a given that originality means quality — especially since we measure originality via linguistic analysis. A title in complete gibberish is highly original, but likely of low quality. Moreover, you need to assume that original content (the thing linked by the Reddit post) comes with an original title (the text the user writes to describe the linked content). Then there is the questionable relationship between the centrality of the website you used versus your own centrality as a potential superstar poster on Reddit — Gallowboob comes to mind. We detail in the paper why we think these concerns are valid, but they do not undermine the interpretation of our results too much.

This is relevant for the broad community studying the success of viral ideas on social media. The accepted wisdom is that the content of a post doesn’t play that much of a role in its success in spreading — other factors like its starting position in the network, its timing, etc. are the only things that matter. I’ve struggled with this notion in the past. With this paper we show a much more complex picture. Maybe the role of the content is underestimated, because it interacts in complex ways with the other studied factors, and it is linked not with success per se, but with the ability to avoid failure — being completely overlooked.

In summary, if you’re a celebrity it’s good and desirable not to put too much effort into making highly original content. Your fan-base is the reason you’ll be successful, and they already liked you for what you did in the past — straying from it might be more damaging than not. On the other hand, if you start from the periphery, you need to put in extra effort to distinguish yourself from everything else out there. The problem is that this striving for originality and high-quality content will not guarantee you success. At most, it will guarantee you’ll not be completely overlooked.