Data-Driven Borders

What defines the human division of territory? Think about it: cities are placed in particular areas for a number of good reasons, such as communication routes, natural resources, and migration flows. But once cities are located in a given spot, who decides where one city ends and another begins? Likewise, who decides on the borders of a region or a nation, and how? More often than not, this decision is quite arbitrary.

Sometimes administrative borders are defined by natural barriers like mountains and rivers. This makes practical sense, although it is not always clear why the border should be that particular mountain or that particular river. In fact, the main criterion is usually historical: some dynasty of dudes conquered that area and then got lazy and didn’t go on (this may be the official version; unofficially, maybe, it’s because they found somebody who kicked their asses all day long, as in the complicated relationship of the Romans with the Parthians).

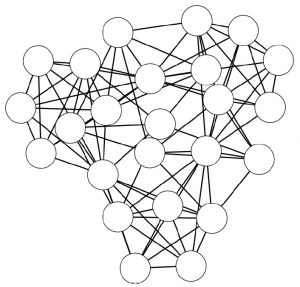

Of course, the borders of states or regions are sometimes re-arranged to better fit practical administrative purposes. In any case, these are nothing more than sub-optimal adjustments of a far-from-optimal process. Network analysis can be useful in this context, because it can provide an objective way to divide the territory according to a particular theory (and it can provide pretty pictures too).

The theory here is very simple: two territories are related if a lot of people travel regularly from one to the other. If people constantly travel back and forth between two territories, then it probably makes sense to combine these territories into one administrative unit. So, how do we determine which territories should be merged, and which shouldn’t be? This problem is a natural fit for network theory, because a network is built into its very definition: territories are the nodes, and two of them are strongly connected if many people travel from one to the other. What we aim for is a grouping of territories. This should look really familiar to some readers of this website: grouping nodes in a network. Yes! Community discovery!
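To make the "network in the definition" concrete, here is a minimal sketch of how trips could be turned into weighted connections between territories. The territory names and the trips are invented for illustration, and `build_flow_network` is a hypothetical helper, not code from any of the papers mentioned here.

```python
from collections import Counter


def build_flow_network(trips):
    """Count trips between pairs of territories, ignoring direction.

    `trips` is an iterable of (origin, destination) territory labels.
    Returns a Counter mapping frozenset({a, b}) to the number of trips.
    """
    weights = Counter()
    for origin, destination in trips:
        # A trip that starts and ends in the same territory links nothing.
        if origin != destination:
            weights[frozenset((origin, destination))] += 1
    return weights


# Hypothetical toy data: five trips among three territories.
trips = [("A", "B"), ("B", "A"), ("A", "B"), ("B", "C"), ("C", "C")]
network = build_flow_network(trips)

for pair, count in network.items():
    print(sorted(pair), count)
```

In this toy case A and B end up strongly connected and B and C only weakly, which is exactly the kind of signal a grouping of territories can be based on.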

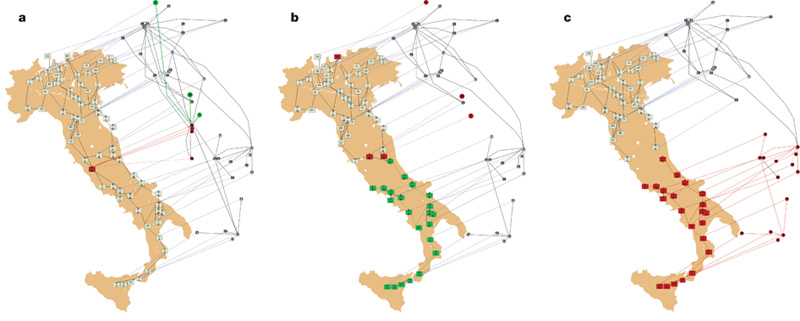

I am not claiming to be the first one to see the problem this way. A number of people have already worked on it: the two most important that I can think of are Brockmann et al. and Ratti et al. However, I am reporting this because I also have a paper on the topic. And, of course, I think it’s better than the alternatives, for a number of reasons that I shouldn’t report because they are boring for non-nerds. But then again, I am a narcissist, so I can’t resist giving you the short list:

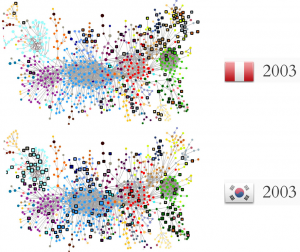

- The previous works are based on less-than-perfect data: Brockmann et al. work with the banknote trajectories recorded by the “Where’s George?” website (an awesome idea, take a look at it), while Ratti et al. use cellphone mobility data. Neither is an exact representation of how people move, and both contain critical error terms. In our work, we use GPS trajectories with very high frequency and precision: we are studying the real thing.

- The previous works use outdated methods for community discovery, which cannot detect small communities. We use a more up-to-date method that is considered the state of the art in community discovery. For example, in Brockmann et al. the entire western part of the United States is apparently one single area, grouping California and Montana and creating a region of 60-something million people.

- We actually create a framework that establishes the correct methodology to approach the problem in general, instead of just studying one particular case.

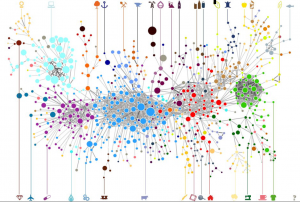

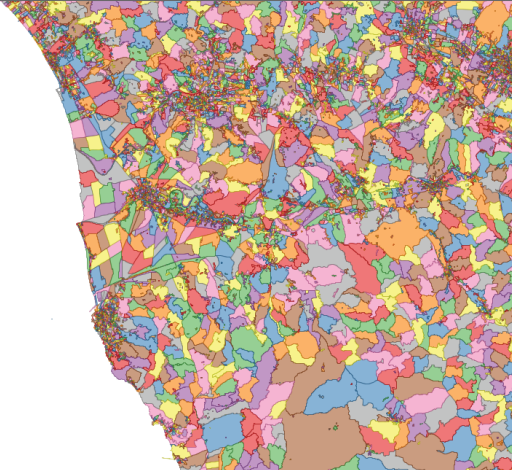

But enough blabbering! I promised pretty pictures and I’ll give you pretty pictures. The general shared methodology is the following (in the pictures, the example of mobility in Tuscany, Italy):

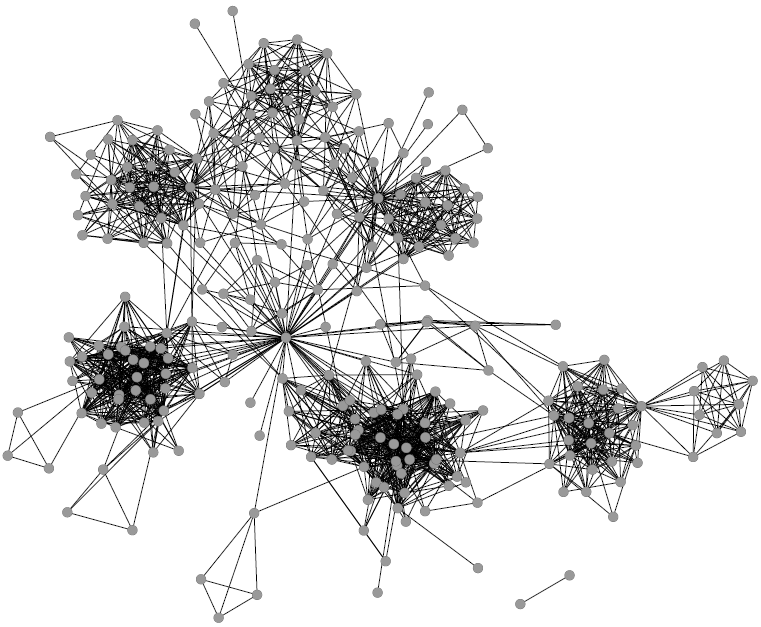

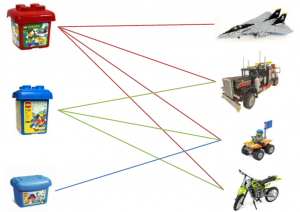

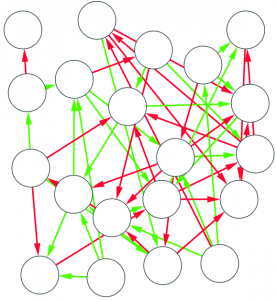

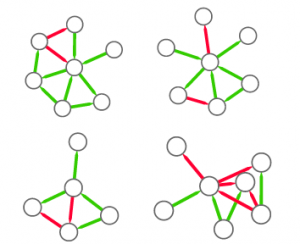

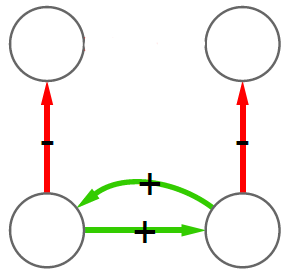

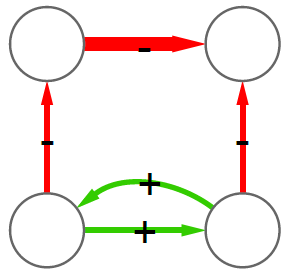

1) We divide the territory into cells (either a regular grid or very fine-grained census cells);

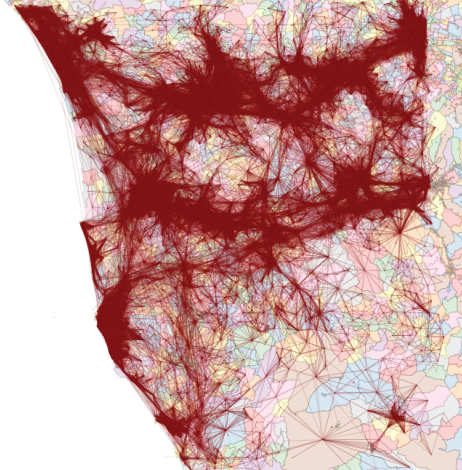

2) We treat each cell as a node and connect the nodes according to how many cars went from one cell to the other;

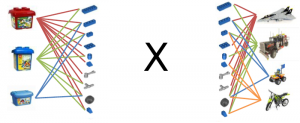

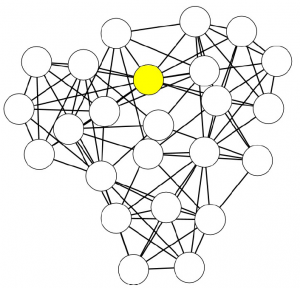

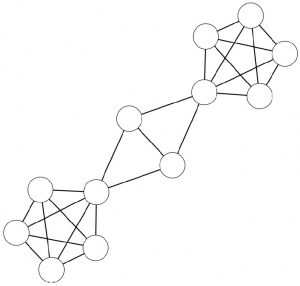

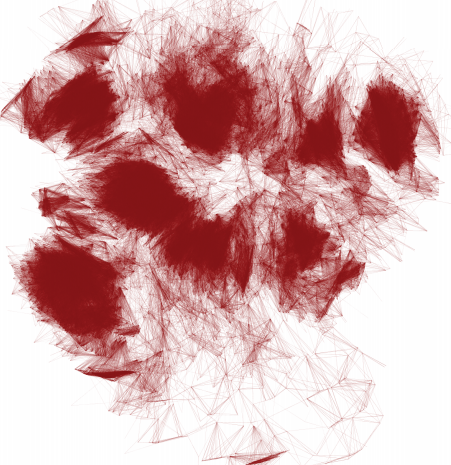

3) We forget about geography and we obtain a complex network (here, the node layout has nothing to do with their location on the map);

4) We apply community discovery, grouping sets of nodes (territories) that are visited by the same people;

5) We put the nodes back in their geographical positions, obtaining the borders we were yearning for.
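For the curious, the five steps above can be sketched in a few lines of plain Python. Treat this as a toy under stated assumptions: the coordinates, the trips and `CELL_SIZE` are invented, and the grouping step uses a simple weighted label propagation as a stand-in, which is not the community discovery method used in the paper.

```python
from collections import Counter, defaultdict

CELL_SIZE = 1.0  # hypothetical grid resolution, in the units of the coordinates


def to_cell(x, y, size=CELL_SIZE):
    """Step 1: map a point to the regular grid cell that contains it."""
    return (int(x // size), int(y // size))


def build_network(trips, size=CELL_SIZE):
    """Step 2: weight each pair of cells by the number of trips between them."""
    weights = defaultdict(Counter)
    for (x1, y1), (x2, y2) in trips:
        a, b = to_cell(x1, y1, size), to_cell(x2, y2, size)
        if a != b:
            weights[a][b] += 1
            weights[b][a] += 1
    return weights


def label_propagation(weights, max_rounds=100):
    """Steps 3-4: group nodes using only the links, never the geography.

    Stand-in algorithm: each node repeatedly adopts the label that carries
    the most trip weight among its neighbours.
    """
    labels = {node: node for node in weights}
    for _ in range(max_rounds):
        changed = False
        for node in sorted(weights):
            votes = Counter()
            for neighbour, weight in weights[node].items():
                votes[labels[neighbour]] += weight
            best = max(votes.values())
            if votes[labels[node]] == best:
                continue  # the current label is already among the strongest
            # Break ties deterministically by taking the smallest label.
            labels[node] = min(l for l, v in votes.items() if v == best)
            changed = True
        if not changed:
            break
    return labels


def regions(labels):
    """Step 5: put the cells back on the map, grouped by community."""
    groups = defaultdict(set)
    for cell, label in labels.items():
        groups[label].add(cell)
    return sorted(sorted(cells) for cells in groups.values())


# Hypothetical trips: two busy pairs of cells joined by one stray trip.
trips = (
    [((0.5, 0.5), (1.5, 0.5))] * 5
    + [((5.5, 5.5), (6.5, 5.5))] * 5
    + [((1.5, 0.5), (5.5, 5.5))]
)
labels = label_propagation(build_network(trips))
print(regions(labels))
```

Because the cells themselves are the node identifiers, step 5 is almost free here: each community is just a set of grid cells, and the border runs wherever two neighbouring cells carry different labels.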

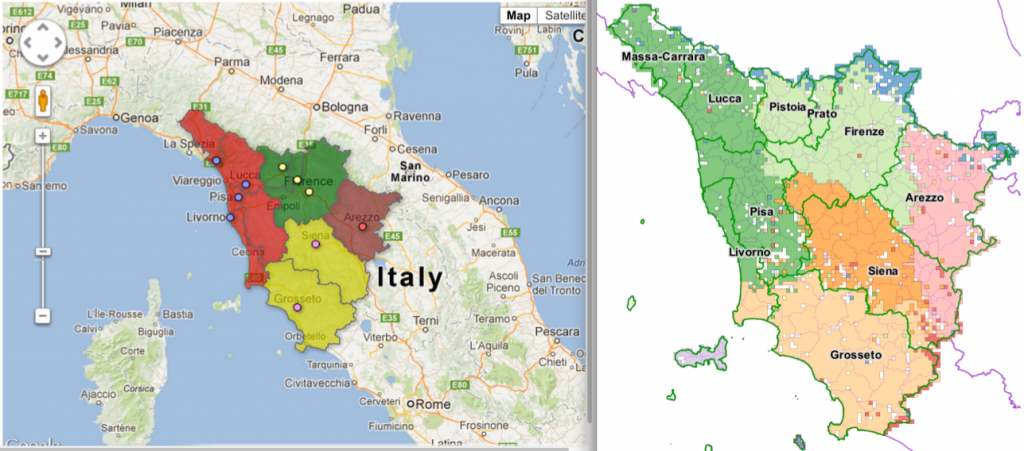

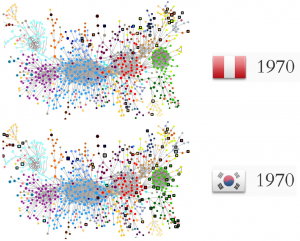

Funnily enough, Italy is undergoing a re-organization process of its regions and provinces. The results in Tuscany are very similar to the insights of our work (not perfectly similar, as the current process is just a merge of the existing provinces and not a real re-design):

On the left, the new provinces (colors) on top of the old ones (lines); on the right, our clusters.

The match suggests that our data-driven borders follow the general intuition about what the borders should look like. However, unlike the policy-makers’ solution, they are not just a blind merge of the existing provinces, which makes them more connected with reality. Hurrah for network analysis!

I am an associate prof at IT University of Copenhagen. I mainly work on algorithms for the analysis of complex networks, and on applying the extracted knowledge to a variety of problems.

My background is in Digital Humanities, i.e. the connection between the unstructured knowledge and the coldness of computer science.

I have a PhD in Computer Science, obtained in June 2012 at the University of Pisa. In the past, I visited Barabasi's CCNR at Northeastern University, and worked for 6 years at CID, Harvard University.
